Having enjoyed many projects on my home PC and Raspberry Pi, I am now taking advantage of AWS’s trial free-tier to see how far I can get on a web app project I have in mind. Below are some of my initial observations.

Free Tier Hours

I was aware that AWS instances are billed rounded up to whole hours, so an instance running for 1 minute counts as one hour. Shutting down one instance and starting another a minute or so later means both are billed for that hour. Doing this 10 times in a 24-hour period results in 34 billed instance-hours, even though less than 24 hours of actual run-time was consumed.
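Here is a small Python sketch of that billing arithmetic. It assumes, as the paragraph does, that every clock hour an instance is running in is billed as a full hour for that instance. The switching schedule (a swap at half past the hour with a one-minute gap) is a hypothetical example, and `billed_hours` and `day_with_switches` are illustrative names.

```python
import math


def billed_hours(runs):
    """Count billed instance-hours for a list of (start, stop) runs.

    Times are minutes since midnight, stop exclusive. Assumes every clock
    hour a run touches is billed as one full hour for that run.
    """
    total = 0
    for start, stop in runs:
        first_hour = start // 60
        last_hour = math.ceil(stop / 60) - 1
        total += last_hour - first_hour + 1
    return total


def day_with_switches(n_switches):
    """Hypothetical day: one instance at a time, replaced by a new one at
    half past the hour n_switches times, with a one-minute gap each time."""
    runs, start = [], 0
    for k in range(1, n_switches + 1):
        stop = 60 * k + 30
        runs.append((start, stop))
        start = stop + 1
    runs.append((start, 24 * 60))
    return runs


runs = day_with_switches(10)
runtime_minutes = sum(stop - start for start, stop in runs)
print(billed_hours(runs), runtime_minutes)  # 34 1430
```

With 10 switches this gives 34 billed hours for 1,430 minutes (about 23.8 hours) of real run-time. With no switches it gives 24.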

Knowing that the free tier has just enough hours to run a t2.micro instance constantly, I chose to shut down my instance over the weekend. My reasoning was that this would save me 48 hours of instance run-time for provisioning different instances as needs arose, without worrying about going over the free-tier limits.
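A quick Python check of that budget reasoning. It assumes a monthly allowance of 750 t2.micro hours, which is AWS's usual free-tier figure. Treat that constant as an assumption and check the current terms. The sketch also ignores the hourly rounding described above.

```python
FREE_TIER_HOURS = 750  # assumed monthly free-tier allowance for t2.micro


def spare_hours(days_in_month, hours_stopped=0, allowance=FREE_TIER_HOURS):
    """Hours left over for extra instances after running one instance
    all month, minus any hours it was deliberately stopped."""
    used = days_in_month * 24 - hours_stopped
    return allowance - used


print(spare_hours(31))                    # 6: always-on barely fits
print(spare_hours(31, hours_stopped=48))  # 54: a weekend off frees two days
```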

Lesson on CPU Credits

However, I had not understood what CPU credits are in AWS before starting this. Had I done so, I would have worked a little differently.

As stated in the documentation:

One CPU credit is equal to one vCPU running at 100% utilization for one minute.

The t2.micro instance used in the free tier accrues 6 CPU credits per hour and can hold a maximum balance of 144 credits.
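The credit definition makes a couple of conversions easy to sketch in Python. The snippet turns utilisation and time into credits. It also shows that earning 6 credits per hour matches an average of 10% of one vCPU, which is the baseline the t2.micro falls back to. The function names are illustrative, and details such as launch credits are ignored.

```python
EARN_PER_HOUR = 6  # t2.micro: CPU credits earned per hour (figure from this post)


def credits_used(utilisation, minutes, vcpus=1):
    """One CPU credit = one vCPU at 100% utilisation for one minute."""
    return utilisation * minutes * vcpus


def break_even_utilisation(earn_per_hour=EARN_PER_HOUR, vcpus=1):
    """Average utilisation at which credits are spent exactly as fast as earned."""
    return earn_per_hour / (60 * vcpus)


print(credits_used(1.0, 20))     # 20.0 credits for 20 minutes flat out
print(break_even_utilisation())  # 0.1, i.e. 10% of one vCPU
```

By the same arithmetic, a full 144-credit balance is 144 minutes (2.4 hours) of one vCPU at 100%.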

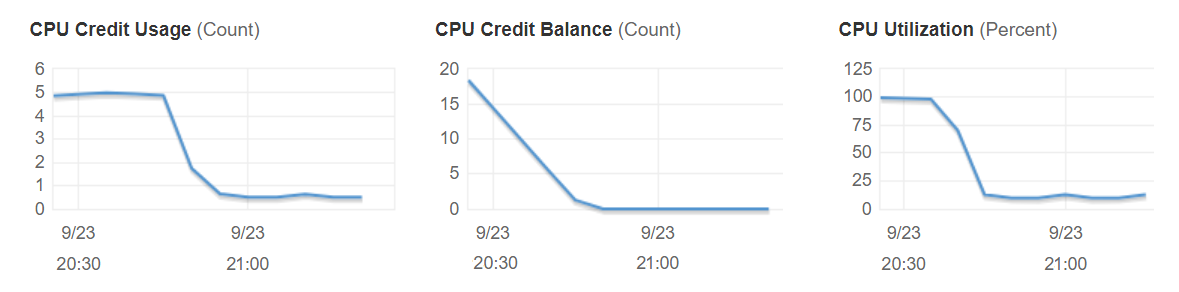

When I resumed my instance on Monday evening it had a balance of only 20 CPU credits, enough for about 20 minutes at 100% CPU, as depicted in the charts above.

The leftmost of the three plots above shows CPU credits being used constantly for the first 20 minutes at a rate of 5 credits per data point. CloudWatch reports CPU credit metrics over five-minute periods, so that is one credit per minute: a fully busy vCPU. This causes the CPU credit balance to plummet until it is empty. Once the credits are gone, CPU credit usage drops off, but so does CPU utilisation.
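The shape of those charts can be roughly reproduced with a minute-by-minute Python simulation. This is a sketch under simplifying assumptions, not AWS's exact accounting:

- credits trickle in continuously at 6 per hour, up to the 144 cap;
- a CPU-bound job wants a full vCPU;
- the instance can only spend credits it already has.

`simulate` is an illustrative name.

```python
def simulate(start_balance, minutes, demand=1.0, earn_per_hour=6, cap=144):
    """Minute-by-minute sketch of a single-vCPU t2 instance's credit balance.

    demand is the CPU fraction the workload wants (1.0 = a full vCPU).
    Returns a list of (utilisation, balance_after) tuples, one per minute.
    """
    earn_per_minute = earn_per_hour / 60
    balance = start_balance
    history = []
    for _ in range(minutes):
        # Credits accrue continuously, up to the maximum balance.
        balance = min(cap, balance + earn_per_minute)
        # Only banked credits can be spent, so an empty balance settles at
        # the earn rate: 0.1 credit per minute, i.e. 10% of the vCPU.
        utilisation = min(demand, balance)
        balance -= utilisation
        history.append((utilisation, balance))
    return history


history = simulate(start_balance=20, minutes=60)
full_speed = sum(1 for u, _ in history if u == 1.0)
print(full_speed, history[-1])  # 22 (0.1, 0.0)
```

Starting from 20 credits, this gives 22 minutes at full speed, then a steady 10%. That is slightly more than 20 minutes because credits keep arriving at 0.1 per minute while the job runs.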

When the instance runs out of credits, it is capped at its baseline performance, which for the free tier’s t2.micro instances is 10% CPU utilisation. I was able to install simple R packages, slowly, and little else, so I did not get much done that day. The silver lining: it gave me the time and inclination to write this blog post.

Comparisons vs Raspberry Pi?

I built and tested the first version of my app on a Raspberry Pi 3b, so I feel compelled to share some comparisons.

Building on a Raspberry Pi has a huge disadvantage in that many applications (in my case R and Shiny Server) need to be compiled from source. Shiny Server was the biggest time-hog here, taking around 12 hours. I chose to VNC into the Pi’s desktop to run the build so as not to risk the SSH session disconnecting, which also meant I did not need to keep my laptop on while it compiled.

In practice it takes a couple of days to build and install the software my web app requires on a Pi. By comparison, it can be done in a few hours on an AWS instance; however, it can take a few days to accrue enough CPU credits for that much full-speed run-time.

I wish I had kept better records of how long this all took, but my anecdotal experience suggests t2.micro instances are pretty much equivalent to a Raspberry Pi 3b. And if you only use applications that are in Raspbian’s repositories, the Pi should be a sure winner.

Estimated Performance Comparison

Running lscpu reveals that my instance is on an Intel(R) Xeon(R) CPU E5-2676 v3 @ 2.40GHz. The lack of public information on this CPU suggests it is a bespoke SKU made for AWS, so I can only estimate performance figures for it. The host server most likely has hyperthreading enabled, in which case my one vCPU is only one 24th of the physical chip’s performance. Remembering that CPU credits are a limiter, we must model the instance as having 6 minutes in each hour at 100% of a vCPU and the remaining 54 minutes at 10%.

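That averages out to (6 × 100% + 54 × 10%) / 60 = 19% of one vCPU. Spread across the 48 hardware threads of a dual-socket server, the t2.micro instance works out to approximately one 250th of the host.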
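The same estimate as a Python sketch. It encodes the model above: 6 minutes at 100% plus 54 minutes at the 10% baseline. It assumes 12 cores and 2 hardware threads per socket, which is what the "one 24th" figure implies.

One caveat is worth checking. AWS describes the baseline as the utilisation at which credits earned equal credits spent. If the 10% is paid for out of the same 6 credits per hour, the sustainable average would be nearer 10% than 19%, and `burst_minutes=0` shows that case. The function names are illustrative.

```python
def vcpu_fraction(burst_minutes=6, baseline=0.10):
    """Average share of one vCPU over an hour: burst_minutes at 100%,
    the rest of the hour at the baseline (the model described above)."""
    return (burst_minutes * 1.0 + (60 - burst_minutes) * baseline) / 60


def server_fraction(cores_per_socket=12, threads_per_core=2, sockets=2, **model):
    """Share of the whole host, assuming one vCPU is one hardware thread."""
    vcpus_per_host = cores_per_socket * threads_per_core * sockets
    return vcpu_fraction(**model) / vcpus_per_host


share = server_fraction()
print(f"{vcpu_fraction():.0%} of a vCPU, about 1/{1 / share:.0f} of the host")
# 19% of a vCPU, about 1/253 of the host
```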

| | T2.micro (estimated*) | Raspberry Pi 3b |
|---|---|---|
| Sustained peak performance | 3.5 GFLOPS | 4.8 GFLOPS |
| Linpack | 3.2 GFLOPS | 3.1 GFLOPS** |

*This is the average sustainable over an hour, estimated rather than measured.

**bare board, no heat-sink.

The results are shockingly close. In future I would consider the cost-effectiveness of hosted Raspberry Pis. A cluster of Pis would give some resilience and good scalability, although it would significantly increase the infrastructure complexity. A key advantage of AWS, in my mind, is that they do the infrastructure and I just make stuff on it.

Today I Learned:

Lots. Today was a good day.

Managing instance run-time is important for staying within budget, but when using t2 “burstable” instances it is also important to manage CPU credits.

Were I to start again, I would not shut down my free-tier instance for the whole weekend. I might still shut it down to save hours, but I would resume it on the Sunday so it could accrue its maximum CPU credit balance ready for building on the Monday. Similarly, I would try to start new free-tier instances a good number of hours early, if foresight permits.
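Planning that lead time is simple accrual arithmetic, sketched here in Python. It assumes the instance sits essentially idle after it is started, so it earns 6 credits per hour and spends almost none. It also assumes the balance starts empty. Launch credits and background load are ignored, and the function names are illustrative.

```python
EARN_PER_HOUR = 6  # t2.micro credits earned per hour (from this post)
MAX_BALANCE = 144  # t2.micro maximum credit balance (from this post)


def balance_after_idle(hours, start_balance=0.0):
    """Credit balance after idling for the given number of hours."""
    return min(MAX_BALANCE, start_balance + EARN_PER_HOUR * hours)


def hours_of_lead_time(target=MAX_BALANCE, start_balance=0.0):
    """How many hours before a build session to start the instance."""
    return max(0.0, target - start_balance) / EARN_PER_HOUR


print(hours_of_lead_time())    # 24.0: start Sunday evening for Monday evening
print(balance_after_idle(12))  # 72.0: half a balance after half a day
```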

The EC2 allowance on the AWS free tier is limited to 12 months, meaning that for future projects I may have to pay for all instances. Here I used the t2.micro as it is the only instance type on the free tier; however, it is apparent that burstable t2 instances are not well suited to building on. Next time I would compare the effective cost of building on a non-burstable instance such as a1.medium. I may build on such an instance, capture an image, and then deploy on a t2 as a cheap run-time environment. Since a1 instances are ARM-based and t2 instances are x86, that image workflow would need a non-burstable x86 instance type rather than an a1.

This is the tip of the iceberg. You could make a career of profiling workloads and matching them to the appropriate AWS product(s). For companies already spending big on AWS the savings could be huge, immediate and non-disruptive.