Police forces in the UK have recently been criticised for using facial recognition systems that some claim are “dangerously inaccurate”. The House of Commons Science and Technology Committee has called for trials to be suspended until relevant regulations are in place. Some of these systems have demonstrated just 5% accuracy.

I do not believe 5% is necessarily the bad part of this story.

The Size Instinct

When talking about accuracy, 5% is an emotive number. It is emotive because we all know 5% is not high, and that leads us to instinctively think it is “bad”. Objectively there is not enough information to make this link, but subjective instincts are hard to override. The late Hans Rosling put it better than I can:

“Never, ever leave a number all by itself. Never believe that one number on its own can be meaningful. If you are offered one number, always ask for at least one more.”

Rosling, Hans. Factfulness: Ten Reasons We’re Wrong About The World – And Why Things Are Better Than You Think.

If you could predict roulette spins with 5% accuracy you would be a rich person*. Your odds of picking the right number by chance are 2.7% on a single-zero European wheel (2.63% on a double-zero American wheel). If you picked the right number 5% of the time, then over enough spins the 35:1 payout on each win would more than cover the 95% of spins where you are wrong.

*Well, either you’d be a rich person or a very unfortunate poor one.
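The roulette arithmetic can be checked with a short Python sketch. It assumes a straight-up bet on a single number paying 35:1 and computes the expected profit per unit staked as p × 35 − (1 − p), where p is the hit rate. `expected_profit` is a hypothetical helper written for this example; exact fractions are used to avoid rounding noise.

```python
from fractions import Fraction

PAYOUT = 35  # a winning straight-up bet pays 35 times the stake (stake also returned)


def expected_profit(hit_rate):
    """Expected profit per 1-unit straight-up bet, given the chance of winning."""
    return hit_rate * PAYOUT - (1 - hit_rate) * 1


european = expected_profit(Fraction(1, 37))    # 37 pockets: 0 to 36
american = expected_profit(Fraction(1, 38))    # 38 pockets: 0, 00, 1 to 36
predictor = expected_profit(Fraction(5, 100))  # the hypothetical 5% predictor

for label, value in [("European, random pick", european),
                     ("American, random pick", american),
                     ("5% predictor", predictor)]:
    print(f"{label}: {float(value):+.4f} per unit staked")
```

Picking at random loses about 2.7p (European) or 5.3p (American) for every £1 staked, while a 5% predictor expects to win 80p for every £1 staked. The long losing streaks in between are what the footnote is about.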

Accuracy Metrics

Is 5% good or bad here? To answer that we need to break accuracy down a little more, because some inaccurate predictions are more harmful than others. Every prediction falls into one of four groups: true positives, true negatives, false positives, and false negatives.
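One plausible reading of a figure like 5% is precision: the share of alerts that turned out to be genuine matches. The sketch below uses made-up counts (not from any real deployment) for a camera that scans 100,000 faces, to show how the same system can score 5% on precision and above 99% on overall accuracy, simply because innocent passers-by vastly outnumber people on a watchlist.

```python
# Hypothetical counts for one deployment: every face that passed the camera.
TRUE_POSITIVES = 10      # watchlist faces correctly flagged
FALSE_POSITIVES = 190    # innocent passers-by wrongly flagged
FALSE_NEGATIVES = 2      # watchlist faces the system missed
TRUE_NEGATIVES = 99_798  # innocent passers-by correctly ignored

total = TRUE_POSITIVES + FALSE_POSITIVES + FALSE_NEGATIVES + TRUE_NEGATIVES

# Share of alerts that were genuine matches.
precision = TRUE_POSITIVES / (TRUE_POSITIVES + FALSE_POSITIVES)
# Share of watchlist faces that were caught.
recall = TRUE_POSITIVES / (TRUE_POSITIVES + FALSE_NEGATIVES)
# Share of all faces classified correctly, flagged or not.
accuracy = (TRUE_POSITIVES + TRUE_NEGATIVES) / total

print(f"precision={precision:.1%} recall={recall:.1%} accuracy={accuracy:.2%}")
```

Which of these numbers gets reported changes the story completely, which is exactly why Rosling warns against leaving a number by itself.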

In medical screening a false positive may cause a patient unnecessary stress and the disruption of further follow-up tests, but a false negative can kill. In cases like these a compromise must be reached that delivers the greatest good, irrespective of absolute accuracy.
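In practice that compromise is usually struck by choosing a decision threshold. In this hedged sketch, ten hypothetical screening cases each carry a model score, and a case is flagged when its score meets the threshold. The costs are assumptions: a missed diagnosis is weighted as 20 times worse than an unnecessary follow-up. Sweeping the thresholds shows that the one minimising harm is not the one maximising accuracy.

```python
# Hypothetical screening results: (model score, patient actually has the condition?)
CASES = [
    (0.95, True), (0.90, False), (0.80, True), (0.70, False), (0.60, False),
    (0.50, True), (0.40, False), (0.30, False), (0.20, False), (0.10, False),
]

COST_FALSE_POSITIVE = 1   # assumed: a stressful but harmless follow-up test
COST_FALSE_NEGATIVE = 20  # assumed: a missed diagnosis is far more harmful


def confusion_counts(threshold):
    """Return (tp, fp, fn, tn) when every case scoring >= threshold is flagged."""
    tp = fp = fn = tn = 0
    for score, positive in CASES:
        flagged = score >= threshold
        if flagged and positive:
            tp += 1
        elif flagged:
            fp += 1
        elif positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def accuracy(threshold):
    tp, _, _, tn = confusion_counts(threshold)
    return (tp + tn) / len(CASES)


def cost(threshold):
    _, fp, fn, _ = confusion_counts(threshold)
    return fp * COST_FALSE_POSITIVE + fn * COST_FALSE_NEGATIVE


# Candidate thresholds: each observed score, plus one above them all (flag nobody).
thresholds = sorted({s for s, _ in CASES} | {1.01}, reverse=True)

best_for_accuracy = max(thresholds, key=accuracy)  # first maximum in descending order
best_for_cost = min(thresholds, key=cost)

for name, t in [("max accuracy", best_for_accuracy), ("min cost", best_for_cost)]:
    print(f"{name}: threshold={t}, accuracy={accuracy(t):.0%}, cost={cost(t)}")
```

With these numbers the accuracy-maximising threshold (0.95) misses two of the three real cases, while the cost-minimising threshold (0.5) catches all three at the price of three false alarms. It has lower accuracy (70% versus 80%) and a precision of just 50%, yet does far less harm.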

I won’t profess to know what trade-offs the police’s recognition systems were designed to make, but 5% accuracy might be a fair compromise, and therefore a “good” accuracy.

The Bad Part

The Snowden leaks showed the world that we are being monitored, logged, and, where necessary, audited. Some protested, but the overwhelming majority chose to ignore it and saw no impact on their lives.

Personally, I’m fine with GCHQ and the NSA monitoring us. As I see it, my data will only be seen by operatives who look at thousands of different people’s records, who don’t know me, and who won’t remember me. We have anonymity here because they simply don’t care what our names are.

It becomes different when the technology is rolled out to everyday policing. No longer is it unknown spooks behind closed doors, but police officers who may be our neighbours, friends, and acquaintances. It is hard to see it stopping at matching faces against a watchlist.

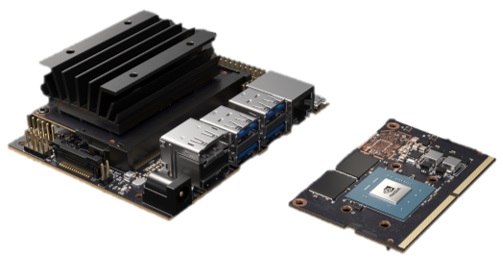

NVIDIA Jetson Nanos are only a little larger than the famously credit-card-sized Raspberry Pi. With more police officers wearing high-resolution body cameras, it is near-trivial to integrate inference hardware like the Jetson into these devices’ video streams.

RoboCop-style heads-up classification and profiling of individuals is no longer science fiction but the next inevitable small step. It could produce life-saving tools for real-time tasks such as spotting hidden weapons in a moving crowd, but ultimately it reduces people to cold, hard statistics.

I could be proud of a police force that ethically uses every tool available to protect and serve the public, but I fear these tools will erode the human element of policing.