I have been writing R code for more than 5 years, almost exclusively on Windows desktops. Some scripts I have put into production on Linux servers, commonly Raspberry Pis.

Where possible I try to maintain a single code-base which can run on both platforms.

Sympathy for the Devil

Having spent a good part of my youth writing computer game code, I naturally became good at anticipating and handling the issues interactive programs face when less controllable elements like “people” have to be accounted for. But this being my first R project to feature user interaction beyond visualisation, there were naturally a number of new exceptions I did not initially foresee, some of which took a tremendous debugging effort before I realised their annoyingly simple solutions.

It has given me an appreciation for the difficulties Skynet faced when sending a robot back in time to kill Sarah Connor. I originally thought it unrealistic that an all-powerful networked system like that could fail to design a simple single-task robot. But how was the Terminator to know there would be more than one Sarah Connor? In a vectorised language like R, when you call a function on a list you get a different result to calling it on the individual elements of that list.

And what if an apartment, or a data.frame, is empty? Should we know to skip it or pursue an alternative course of action? It is no surprise the Terminator fell into the deepest depth of the exception handling code from the outset.
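The two cases above (more than one match, and no match at all) can be sketched in base R. The helper name `summarise_matches` and the example data are hypothetical, chosen only to illustrate checking the row count before acting on a result:

```r
# Hypothetical helper: decide what to do with a search result
# before assuming it contains exactly one row.
summarise_matches <- function(matches) {
  # An empty data.frame has zero rows; skip it rather than index into it.
  if (is.null(matches) || nrow(matches) == 0) {
    return("no match")
  }
  # More than one row: do not silently act on the first one.
  if (nrow(matches) > 1) {
    return(paste("ambiguous:", nrow(matches), "matches"))
  }
  paste("match:", matches$name[1])
}

# Illustrative data only.
people <- data.frame(
  name = c("Sarah Connor", "Sarah Connor", "Kyle Reese"),
  stringsAsFactors = FALSE
)

# drop = FALSE keeps the result a data.frame even with one column.
sarahs <- people[people$name == "Sarah Connor", , drop = FALSE]
johns  <- people[people$name == "John Connor", , drop = FALSE]

summarise_matches(sarahs)
summarise_matches(johns)
```

Subsetting with `drop = FALSE` matters here: without it a single-column data.frame collapses to a plain vector and `nrow()` returns `NULL`.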

Check install exit codes

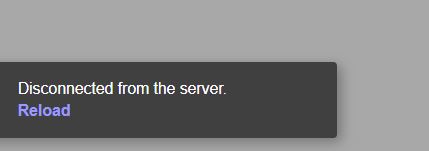

In my experience the most common cause of the dreaded “Disconnected from server” error is trying to load something that cannot be reached, causing the R program to crash. We can spend ages checking that resources are in the correct directories, but the answer is often simpler: if the disconnect happens straight away, it is often an R package that is not installed on the server.

The simple solution here is to check the packages are installed, even if you know you installed them. A Linux server should be a lean machine: only packages required for the server’s workload should be installed, so many of the packages taken for granted on a desktop deployment will not be there on a server image.
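One way to make that check routine is a small base R helper run on the server before starting the app. This is a minimal sketch; the function name `find_missing_packages` is hypothetical, and the package list is only an assumption about what an app like this one needs:

```r
# Hypothetical helper: return the names of packages that cannot be loaded.
find_missing_packages <- function(pkgs) {
  # requireNamespace() returns FALSE (rather than erroring) when a
  # package is not installed or cannot be loaded.
  available <- vapply(pkgs, requireNamespace, logical(1), quietly = TRUE)
  unname(pkgs[!available])
}

# Assumed package list, for illustration only.
required <- c("shiny", "leaflet", "RMySQL")
missing_pkgs <- find_missing_packages(required)

if (length(missing_pkgs) > 0) {
  message("Not installed on this machine: ", paste(missing_pkgs, collapse = ", "))
}
```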

The exit codes and error output from installing R packages are very good at guiding you through what is needed. For example:

------------------------- ANTICONF ERROR ---------------------------

Configuration failed because openssl was not found. Try installing:

* deb: libssl-dev (Debian, Ubuntu, etc)

* rpm: openssl-devel (Fedora, CentOS, RHEL)

* csw: libssl_dev (Solaris)

* brew: openssl@1.1 (Mac OSX)

If openssl is already installed, check that 'pkg-config' is in your

PATH and PKG_CONFIG_PATH contains a openssl.pc file. If pkg-config

is unavailable you can set INCLUDE_DIR and LIB_DIR manually via:

R CMD INSTALL --configure-vars='INCLUDE_DIR=... LIB_DIR=...'

--------------------------------------------------------------------

Using Ubuntu, this is easily fixed by installing libssl-dev from the Linux shell:

sudo apt-get install libssl-dev

That being said, always check your exit codes! One particularly nasty one I hit was installing the R package RMySQL. Having already installed my application on the Raspberry Pi, I knew I had to install libmariadb-dev & libmariadb-lgpl-dev and confidently installed these. Too confidently: I did not notice RMySQL had finished with a non-zero exit code. “Disconnected from server”. Agh.

It failed because, unlike my Raspberry Pi deployment, my AWS database is on a different node from my AWS compute instance. Appropriately, the compute instance had only the MySQL client installed, not the MySQL server.

Though libmariadb-dev & libmariadb-lgpl-dev did indeed install, what I needed was the client packages:

sudo apt-get install libmariadbclient-dev -y

sudo apt-get install libmariadb-client-lgpl-dev -y

I have said it twice already but it bears repeating: check your install exit codes.
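Part of the reason a failed install is easy to miss is that `install.packages()` reports a non-zero exit status as a warning rather than an error, so a script carries on regardless. A minimal sketch of a stricter wrapper follows; the name `install_and_verify` and its `installer` argument are hypothetical, introduced only so the check can be shown without assuming a particular repository setup:

```r
# Hypothetical wrapper: install a package if needed, then stop loudly
# if it still cannot be loaded afterwards.
install_and_verify <- function(pkg, installer = utils::install.packages) {
  if (!requireNamespace(pkg, quietly = TRUE)) {
    installer(pkg)
  }
  # A failed build only produces a warning, so check the outcome explicitly.
  if (!requireNamespace(pkg, quietly = TRUE)) {
    stop("Package '", pkg, "' is not loadable after install; ",
         "check the build output for a missing system library.",
         call. = FALSE)
  }
  invisible(TRUE)
}

# Example use on the server (commented out so nothing is installed here):
# install_and_verify("RMySQL")
```

Turning the silent warning into a hard stop means the problem surfaces at install time instead of as a disconnect when the app first loads the package.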

File Paths & Leaflet custom tiles

I have known about the key differences between Linux and Windows when interacting with a host’s filesystem for many years. In R, in most cases we can avoid issues by using base R’s file.path() constructor, which sidesteps the forward-slash/back-slash difference.
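As a small illustration of why file.path() helps: it joins its arguments with a forward slash on every platform, and Windows accepts forward slashes in paths, so the same call works on both. The directory names below are placeholders:

```r
# file.path() joins components with "/" on Windows and Linux alike.
tile_dir <- file.path("path", "to", "tiles")
print(tile_dir)

# Building on an existing path works the same way.
tileset_dir <- file.path(tile_dir, "tileset_a")  # "tileset_a" is a placeholder name
print(tileset_dir)
```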

My application uses Leaflet, an interactive mapping library, but uses my own custom-generated mapping tiles instead of real-world provider tiles. The tiles are stored on the local filesystem and, in order to be served to the Shiny web app, need to be “published” via a resource path:

addResourcePath("my_tiles", file.path("path", "to", "tiles"))

Easy enough so far.

This allows the tiles to be addressed as a URL from within the Shiny app, which should allow them to be displayed using leaflet with:

leaflet() %>%
  addTiles(urlTemplate = paste0("/my_tiles/", vars$current_tileset, "/{z}/{x}/{y}.png"))

This works fine on Windows, but on Linux no tiles were displayed on the map. It took me a long time to realise that the issue was the leading “/” in “/my_tiles/”. In Linux of course “/” is root. Leaflet was then not looking for the resourcePath “my_tiles” but looking for the directory “my_tiles” in root. This directory did not exist, but even if it did, Shiny & Leaflet would not have been allowed to access it.

The solution was as simple as removing the leading “/”:

leaflet() %>%
  addTiles(urlTemplate = paste0("my_tiles/", vars$current_tileset, "/{z}/{x}/{y}.png"))

To my surprise this works on both Windows & Linux.
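To make the leading “/” harder to reintroduce by accident, the template could be built by a small helper that strips it. This is only a sketch in base R; the function name `tile_url_template` and the tileset name are hypothetical:

```r
# Hypothetical helper: build a relative tile URL template for a resource path.
tile_url_template <- function(prefix, tileset) {
  # Remove any leading slashes so the URL stays relative.
  prefix <- sub("^/+", "", prefix)
  paste0(prefix, "/", tileset, "/{z}/{x}/{y}.png")
}

# "tileset_a" is a placeholder tileset name.
template <- tile_url_template("/my_tiles", "tileset_a")
print(template)
```

The result can then be passed as the urlTemplate argument to addTiles() in place of the inline paste0() call.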

Going forward: Containers?

Are containers the better solution to my problem? My day job is in an area of IT based on raw performance from bare-metal. Containers are only now starting to be seriously on my radar.

There are a number of different containerisation technologies, with different objectives. Docker is a leading container technology focused on portability and application independence from underlying infrastructure.

At a first glance this would seem ideal for my multi-platform perils.

A key reason why I write in R is that I can write fast in R. Particularly when using RStudio, it becomes effortless to both write and check what is happening. I fear containerising will have too large an impact on my simple workflow. If you have experience in this I would be interested to hear it.

I haven’t containerised because I haven’t needed to, but my hobby projects are becoming so much more complex that perhaps I need to invest in skilling up to a more manageable multi-platform development ecosystem.