My aim is to develop a system to spot specific Lego pieces.

Training Data

Convolutional neural networks (CNNs) have emerged as the leader in image classification AI algorithms and in all likelihood will be what my brick-finder application will be built on.

When training a CNN, we need a dataset consisting of images together with a means of identifying what is in each image. Simplest CNNs will just identify what is in the image, i.e. bricks, cars, flowers etc.. My use case needs to not only identify what bricks are in the image but where.

There are two leading methods of labeling where in an image objects occur, bounding boxes and input masks.

Bounding boxes are easier to compute with as they consist of simply 4 numeric values (y-min, y-max, x-min, x-max) which can be thought of as two opposite corners of the square. Input masks are both harder to label and more intensive to compute as the required raster or shape objects require much more data to define.

If you have used the tag-bricks part of my site you will notice I have used neither of these. But I have reasons, and I have a plan.

Points

My project uses simple points to identify objects in a 2D image. Only two numeric are used to define them (x & y coordinates) and they can be input with a single mouse click.

Simplicity is key to my implementation. It must be simple to tag objects and as frictionless as possible. The nature of my images means the user may need to zoom in and pan around to spot and tag objects. These actions use two natural mouse interactions, being the scroll-wheel and hold-click.

Many existing tools use an array of hot-keys to draw boxes, which although great for professional tagging

How useful are points?

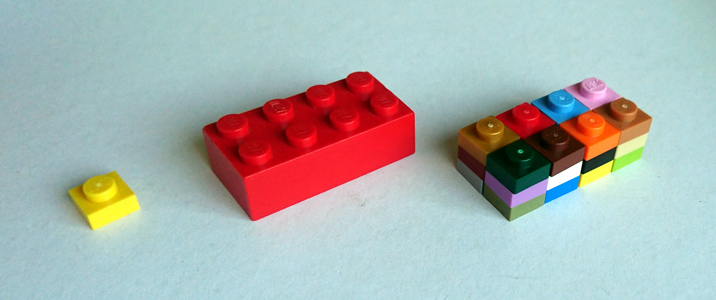

Lego pieces vary significantly in size. If we look at two of the more common pieces, a 1×1 plate and a 2×4 brick we can see the brick is twice the depth, three times the height and four times the length of the plate. You can fit twenty-four 1×1 plates in the space of one 2×4 brick and that isn’t even the largest brick.

A fun edge-case test of any model I create would be to see if it recognises the brick of plates as plates or as a brick.

Bounding boxes and input masks both have a significant advantage over 2D points in that they both capture the relative size of the observation. As such we can be more confident that the features detected within their limits contribute to the identification of the part.

Segmentation

I studied computer vision at University long before neural networks became mainstream, from which I still possess some understanding of geometric image feature extraction. The field has advanced considerably since then, one approach would be using super-pixel segmentation. Here pixels are clustered into a smaller number of super-pixels which are easier to compare.

I will still need to write a function to compare neighbouring super-pixels to work out what super-pixels combine to make a Lego piece segment, but this approach shows promise.

Essentially I will generate a set of segments, and then set about labelling those segments. Once segments are labelled, they can either be used as input masks or the extents of them used to generate bounding boxes. This automated approach may not be as accurate as human-tagged labels but my hope is they are accurate enough that sheer quantity will make up for lack of absolute accuracy.

Which is better?

Once sufficient training data has been captured I would like to compare different labelling methods to answer this. If you have an opinion or tips then please comment below!

I imagine version one will be based on bounding boxes as it is straightforward to simply re-train an existing model on my data, and high-performing bounding-box based models are aplenty at the moment.