In the weeks since my last post I have gained more knowledge of transfer learning and have started to implement it beyond object classification and into object location detection. Although it seems a simple evolution, I was surprised just how much more complicated and how much more pre & post processing is needed to achieve results.

But first, a correction

In my last post I identified what I though may be a glitch in matplotlib where small image fragments were being distorted, however I later discovered this was due to data augmentation. I am familiar with data augmentation and have implemented basic examples in TensorFlow based experiments before, flipping & rotating image data to generate “new” observations from the same data, but it was a surprise to me that FastAI does a lot of it for you.

Using FastAI’s get_fransforms() function with no parameters, it will as standard to a range of data augmentation techniques including flipping horizontally and warping the image to make a new observation appearing to be taken from a different location.

Warping however does not work well for my data. My photos of Lego have a much shorter focal length than typical images of objects in the world and warping starts to break the straight-lines used to identify pieces.

Data augmentation is a great thing and I’m thrilled FastAI have implemented it for me. I settled on the following for my data which yielded better results that my earlier post:

get_transforms(do_flip=True, flip_vert=True, max_lighting=0.1,

max_zoom=1.05, max_warp=0., max_rotate=90)

This removes the standard “warping” but does flip & rotate to essentially generate 8 significantly different tensors for each image. (Though many more slightly different samples from different zoom & lighting simulations etc..)

Find My Bricks

I used sample code from FastAI’s pascal notebook which uses the PASCAL Visual Object Classes dataset, adapting it to suit my data. Getting this code to run highlighted to pace of which AI development proceeding, despite being from 2018, getting this code to run in 2019 required several older packages to be installed.

Installing FastAI v0.7 was itself simple enough, but the code raised a lot of errors which needed to be handled. The most puzzling was a type-error in numpy:

~\Anaconda3\envs\fastai\lib\site-packages\numpy\core\fromnumeric.py in _wrapit(obj, method, *args, **kwds)

45 except AttributeError:

46 wrap = None

---> 47 result = getattr(asarray(obj), method)(*args, **kwds)

48 if wrap:

49 if not isinstance(result, mu.ndarray):

TypeError: loop of ufunc does not support argument 0 of type float which has no callable rint methodI’ve always considered numpy a rock-solid package unlikely to ever significantly change, but google-fu revealed fastai-0.7 requires numpy <=1.15.1. I use conda virtual environments so I am quite happy to experiment rip & replacing package versions, thus a quick call of:

conda install numpy=1.15.1downgraded numpy and packaged depending on it, fixing the error.

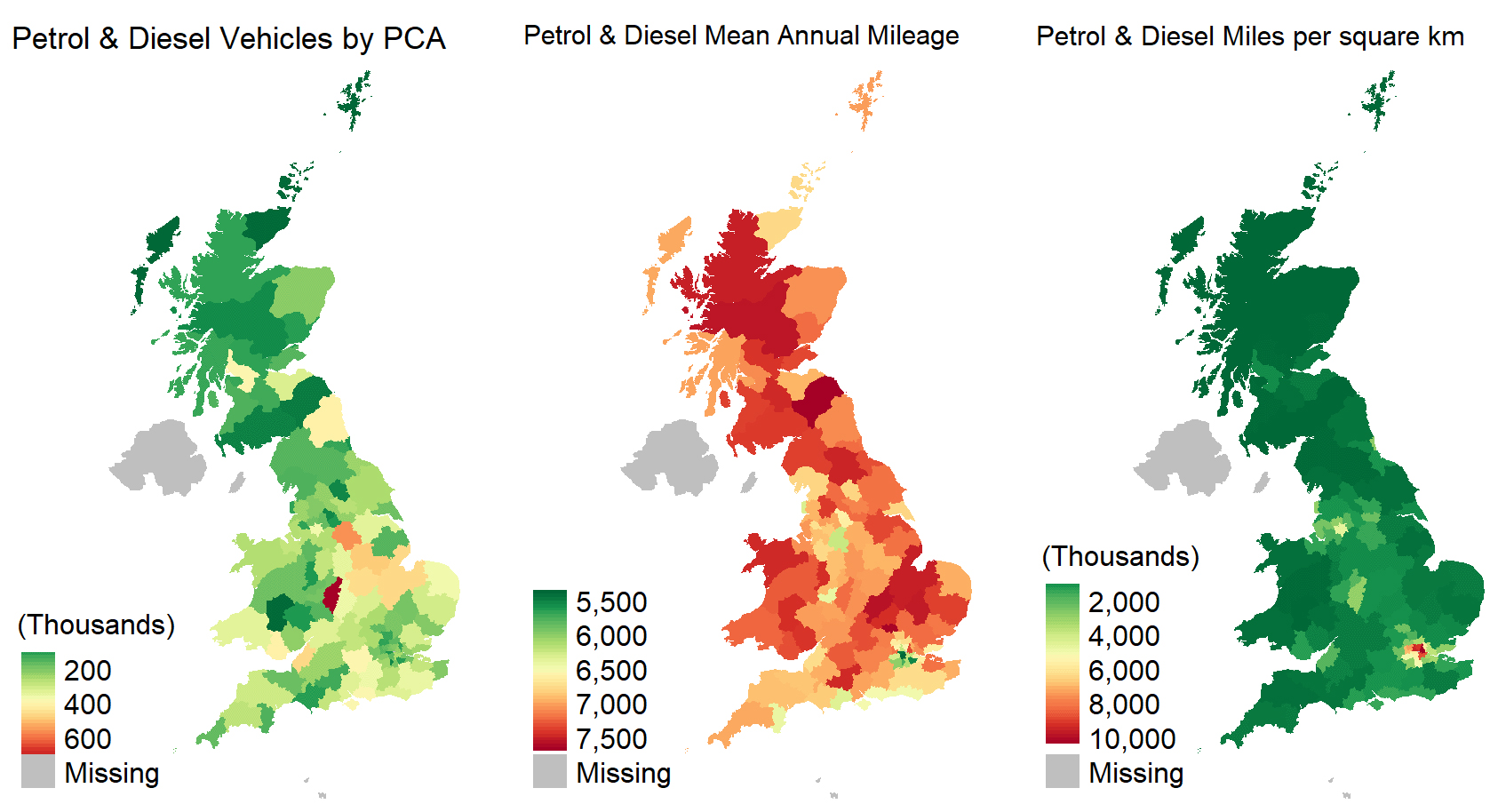

Data Prep

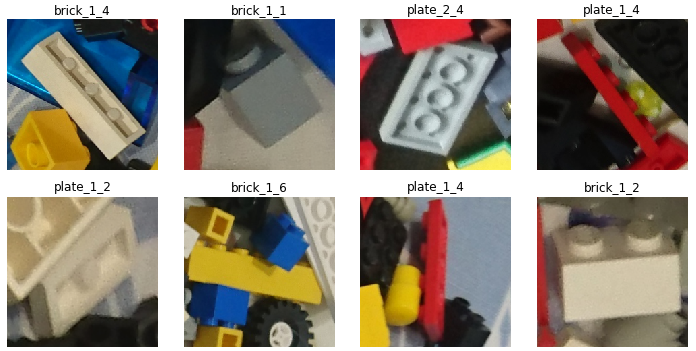

A major difference between my data and that used in the sample code is mine has a very small number of images each with a large number of detections – some having more than a 100 detections. Not wanting to diverge too far from the sample code at this point I split my images down in to smaller squares, which gave me a dataset of ~400 images, each with typically 2-5 detections in them.

Initial model

Using the same parameters in the sample code, I was able to train a mode based on a pre-trained resnet34 model to achieve initial results which were… well terrible. The below based on out-of-band validation data shows the initial model often identifies no pieces, or where it does it is with a very low confidence and usually the wrong piece.

Whereas the multi-classification results are so far not great, we should also remember it is not a true reflection of my target use case of finding bricks.

Adaption

The model returns a huge number of predictions, very few of which make it to the above plots. The sample code does a great job of taking these, finding the highest probability predictions and removing overlapping potential duplicates. I extended this code to instead take the predicted locations for a target class, and return the highest probable results to plot.

In the below image I have cherry-picked the standard 4×2 brick as one of the more common detection in my dataset which returns the following:

There are still a lot of miss-classifications, but model is starting to find the target pieces.

As my understanding of deep learning develops I hope to be able to improve the model, however I am also hoping more data will in itself yield a significant improvement. The VOC dataset used in the sample code has about 2500 observations spread over 20 classes, whilst my dataset currently has 1300 observations over 41 classes.

On those numbers alone it is unlikely my data will achieve a similar accuracy however my dataset is growing and after these experiments I have increased confidence in eventually building a model to find my bricks.