The year 2009. Media dominated by reports of the H1N1 Swine Flu pandemic. Humans got through that relatively unscathed, but it was a bad year to be a pig. A big year for me too. Though not mentioned in the footnotes, 2009 was the year I published my first computer game: TabeBALL, a toon-style table football game on the Xbox 360 Indie Games Channel.

This time round, the pandemic freed up a lot more time for me. I dusted off my programming knowledge, found C# still lingering in my fingertips (though now slower on the keys) and have finally published a sequel, this time in VR. It is available for Oculus Quest via SideQuest or itch.io.

I find VR amazing. Though consumer technology is not yet at the level to fool the eye, I have enjoyed a number of experiences so engaging that disbelief can be suspended, temporarily fooling the mind. I’d like to create a similar experience someday. In the meantime, here are some lessons I learned developing my first VR game.

Use Store Assets

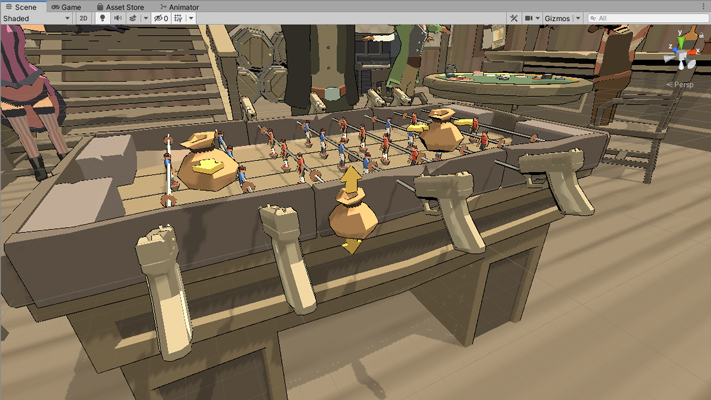

I touched on this in my earlier post, but the current marketplace for bought assets is very developer friendly. As long as you do not expect your project’s art to be unique you can easily acquire a large library of 3D models, animations, textures and sound effects for almost no money.

In 2009 this wasn’t the case, and I made all the assets for my first few games myself. When I compare the time I invested making those to what £20 can get me on Unity’s Asset Store, it becomes a no-brainer.

Your game’s art is the first and often only thing a potential player sees. Expectations are higher than they were in 2009 and your art simply cannot look amateurish.

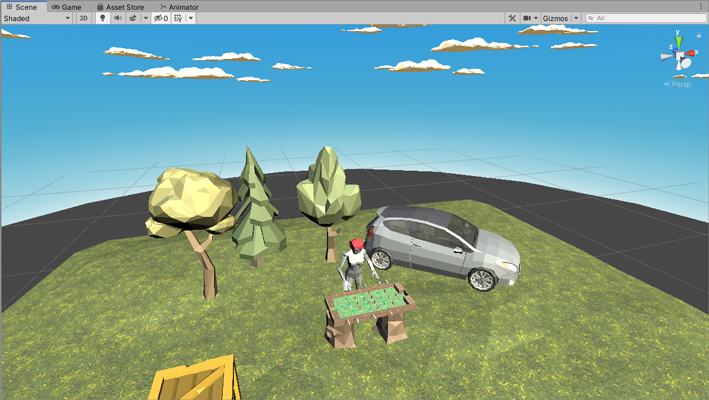

Don’t try to mimic reality too much

Instead, accept the limitations of VR and use them to your advantage to make your game more intuitive and lower effort for the player. For example, it is hard to roll a table football handle when you’re holding a touch controller, as you can’t easily roll it between your fingers. But the handle isn’t really there, so there is no reason why you have to roll it. Instead I put “pistol grips” on the handles to give the player a cue for how to hold them, and implemented a virtual gearbox, where you don’t need to turn the handles very far to get full rotation of the rod.

Similarly, the table isn’t really there, so there’s no reason a player can’t just drag it to a position where it’s easiest for them to operate. Ultimately, the more frictionless things are for the player, the more immersive the game becomes.
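
To make the virtual gearbox idea concrete, here is a minimal sketch of how a small wrist rotation could be scaled up to a large rod rotation. It is not the code from the game: the function name, the 4:1 ratio and the ±180 degree clamp are all assumptions chosen for illustration.

```python
def rod_angle_deg(handle_angle_deg, gear_ratio=4.0, max_rod_deg=180.0):
    """Map the controller's twist angle onto the rod's rotation.

    gear_ratio and max_rod_deg are illustrative assumptions, not values
    taken from the game: with a ratio of 4, a 45 degree twist of the
    wrist in either direction already gives a half turn of the rod.
    """
    rod = handle_angle_deg * gear_ratio
    # Clamp so an over-rotated wrist does not wind the rod past the limit.
    return max(-max_rod_deg, min(max_rod_deg, rod))


# A comfortable 45 degree twist produces a half turn of the rod.
half_turn = rod_angle_deg(45.0)
```

In an engine, the handle angle would come from the controller's orientation each frame, and the ratio is the sort of value worth tuning by play-testing.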

Virtual reality is well suited to things that can’t be done easily in reality. In my opinion the skate-park level in TabeBALL 2VR is much more fun than the classic game, but in reality that many ramps would have you picking the ball up off the floor more often than playing. And spontaneous multi-ball simply isn’t possible in the real world.

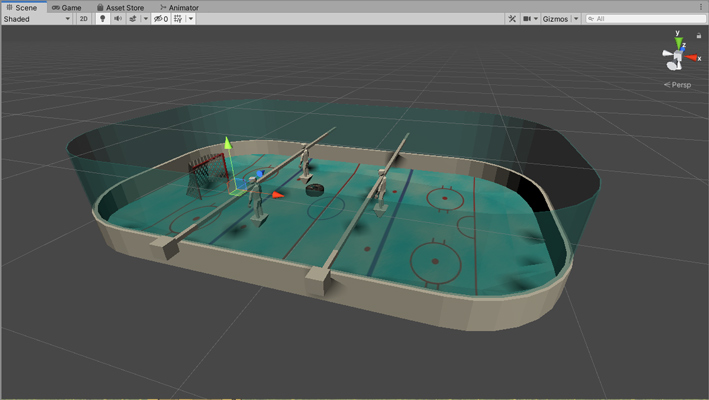

Accept when things don’t work and try something else

I knew I wanted some unrealistic tables. A very early prototype had an ice hockey table, using a zero-friction surface and a puck instead of a ball. No matter how much I tinkered with it, it just didn’t play “right”. It was hard to make players that pivot at the waist hit the puck. I tried making the puck taller, but then it wasn’t hockey. I tried making the players spin around their vertical axis as in table hockey, but then it wasn’t TabeBALL.

Test it, learn from it, move on.

Make the scene alive

There are two main aspects here: sound and movement. Movement is the obvious one, but without both it feels like you are just standing in a model.

Game engines are great for sound in that they can simulate the sound sources and mix them into stereo for you, giving a decent impression of where individual sounds are coming from. Even basic sounds can combine to create rich environments. In TabeBALL 2VR I have collision noises coming from the ball’s location, whilst ambient sound and music come from other sources to give a 3D sense to the world.
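
As a rough illustration of what the engine is doing on your behalf, the sketch below works out left and right channel volumes for a single sound from its position relative to the listener, using constant-power panning. This is a simplified assumption for illustration only: real engines also handle distance attenuation, height and much more, and the function and parameter names here are made up.

```python
import math


def stereo_gains(listener_xz, right_xz, source_xz):
    """Return (left_gain, right_gain) for one sound source.

    Works in the horizontal plane only. right_xz is a unit vector
    pointing out of the listener's right ear. All names are
    illustrative, not an engine API.
    """
    dx = source_xz[0] - listener_xz[0]
    dz = source_xz[1] - listener_xz[1]
    dist = math.hypot(dx, dz)
    if dist == 0.0:
        pan = 0.0  # source is at the listener: play it centred
    else:
        # -1 means fully left, 0 centred, +1 fully right.
        pan = (dx * right_xz[0] + dz * right_xz[1]) / dist
    # Constant-power pan: left^2 + right^2 stays at 1 as the sound moves.
    angle = (pan + 1.0) * math.pi / 4.0
    return math.cos(angle), math.sin(angle)


# A ball collision one metre to the listener's right.
left, right = stereo_gains((0.0, 0.0), (1.0, 0.0), (1.0, 0.0))
```

Attaching each sound to the object that makes it, as with the ball collisions, is what lets the engine do this calculation for every source every frame.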

Monitor performance early

Having developed on Xbox 360, I was already well aware of the need to test on your target platform early. Work out what it struggles with and what it excels at to get the best experience. Xbox 360, for example, struggled with garbage collection, so variables had to be allocated in loading screens only and then reused to prevent frame drops. Meanwhile alpha blending was practically free, so there was a huge amount you could do with texture manipulation and HLSL.
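
The allocate-once-and-reuse pattern described above can be sketched as a simple object pool. This is a language-agnostic illustration written in Python rather than the C# used for the games, and the `Particle` and `ParticlePool` names are hypothetical.

```python
class Particle:
    """A tiny reusable object; the fields are illustrative."""

    def __init__(self):
        self.x = 0.0
        self.y = 0.0
        self.alive = False


class ParticlePool:
    def __init__(self, size):
        # All allocation happens here, e.g. during a loading screen.
        self._free = [Particle() for _ in range(size)]

    def spawn(self, x, y):
        """Reuse a pre-allocated particle instead of creating a new one."""
        if not self._free:
            return None  # pool exhausted: skip rather than allocate mid-frame
        p = self._free.pop()
        p.x, p.y, p.alive = x, y, True
        return p

    def release(self, p):
        """Hand the particle back so a later spawn can reuse it."""
        p.alive = False
        self._free.append(p)


pool = ParticlePool(2)
spark = pool.spawn(1.0, 2.0)
pool.release(spark)
reused = pool.spawn(3.0, 4.0)  # the same object as spark, with new values
```

Because nothing new is created during gameplay, nothing becomes garbage during gameplay either, which is the point of the technique.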

As a mobile device, Oculus Quest has its own limitations, and I was surprised both at what it couldn’t do and what it could do. The first prototype scene had almost no models, no touch support, and used entirely free assets. The high-definition textures with bump-maps looked great, but the frame rate was just too low to be playable.

Experimentation showed the best performance came from using as few textures as possible, four at most, and keeping these to low-resolution squares of 256 to 512 pixels.

I think real-time lights are important for VR, and real-time shadows more situational. For TabeBALL 2VR I found real-time shadows greatly improve a player’s judgement of where the ball is on the table, and judged this more important than more vibrant background detail. For bigger scenes, where you interact with objects all over a room-sized virtual environment, I find shadows less important.

I have learned a huge amount in making this game, but I’m always keen to learn more. If you think there’s something I’ve missed, or have your own feedback, please do drop me a message or leave a comment.